Tuning search engines to return the best results for users is a constant process of experimentation, measurement and adjustment. We hope that we have enough data about which results users prefer (perhaps derived from which results they click on), but if not we often have to create our own, making judgements about which results are relevant. We use this data to test each new configuration, and iterate until we reach a satisfactory score.
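As a rough illustration of deriving judgements from clicks, the sketch below turns a click log into graded relevance judgements using raw click-through rate (CTR). The input format (a list of `(query, doc_id, clicked)` tuples), the function name `judgements_from_clicks`, the minimum-impressions cutoff and the CTR thresholds for each grade are all hypothetical choices for this example. It also ignores position bias (results shown higher up get clicked more regardless of relevance), which real click models try to correct for.

```python
from collections import defaultdict

def judgements_from_clicks(impressions, min_impressions=10):
    """Turn a click log into graded (0-3) judgements keyed by (query, doc_id).

    impressions: iterable of (query, doc_id, clicked) tuples, one per time a
    result was shown. Uses raw click-through rate, so position bias is ignored.
    """
    shown = defaultdict(int)
    clicks = defaultdict(int)
    for query, doc_id, clicked in impressions:
        shown[(query, doc_id)] += 1
        if clicked:
            clicks[(query, doc_id)] += 1

    judgements = {}
    for key, n in shown.items():
        if n < min_impressions:
            continue  # too few impressions to judge reliably
        ctr = clicks[key] / n
        # Arbitrary example thresholds: <5% -> 0, <20% -> 1, <50% -> 2, else 3
        if ctr < 0.05:
            grade = 0
        elif ctr < 0.2:
            grade = 1
        elif ctr < 0.5:
            grade = 2
        else:
            grade = 3
        judgements[key] = grade
    return judgements

# Hypothetical log for the query "loaf"
log = ([("loaf", "doc1", True)] * 6 + [("loaf", "doc1", False)] * 4
       + [("loaf", "doc2", False)] * 10 + [("loaf", "doc3", True)])
print(judgements_from_clicks(log))
# prints {('loaf', 'doc1'): 3, ('loaf', 'doc2'): 0}
```

Here `doc3` is dropped because it was shown only once. In practice the thresholds would need calibrating against some manual judgements before the output could be trusted.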

Many search engines don’t provide the tooling required to carry out this tuning process. A long-time Lucene contributor recently admitted to me that when they worked for a search engine company some years ago, they didn’t spend much time on end-user applications. Their world was internal: creating features on the company roadmap and rolling out the next major version, rather than focusing on the day-to-day needs of users tuning their search in a fast-changing world.

The right tools for the job

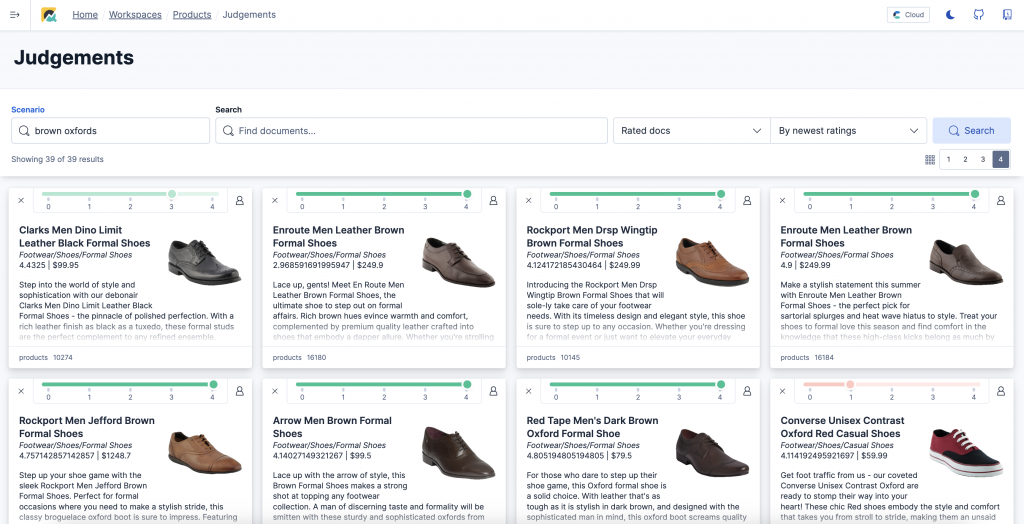

Although most search engine suppliers offer consulting and support for their (paying) users, more often this is provided by external consultants (hello!) and agencies, who sometimes end up building extra tooling and processes to do so. A very good example of this is Quepid, built by my ex-colleagues at OpenSource Connections, which lets you collect relevance judgements and thus measure the quality of a set of search engine queries. It works with a range of search engines including Solr, Elasticsearch, OpenSearch and Algolia, and is both open source and available as a free hosted service. Here are some manual judgements on searches for bread from a previous post, with an overall nDCG measurement for the query ‘loaf’:

Other open source examples include Rated Ranking Evaluator from the Sease team (which sadly hasn’t been updated for a while) and newcomer SearchTweak, and there are commercial tools from companies like SearchHub. This landscape of tools is somewhat patchy: not everything works with every search engine, and keeping up with new versions and API changes is a huge challenge. Often the tools used for search tuning are built in-house: a collection of scripts, hacks, spreadsheets and macros that is hard to maintain and not always easy to use.

New search tuning tools in OpenSearch

I’ve been working with the OpenSearch team for a few years now, both while at OpenSource Connections and afterwards (I was recently proud to be asked to become an OpenSearch Ambassador). My most recent consulting engagement has also focused on OpenSearch. This has given me an opportunity to contribute to some exciting developments around search tuning tools.

The OpenSearch team includes some people who have been in the search business almost as long as I have. They have worked at both ends of the business, as product owners or search engineers for companies using search engines and at companies creating and supporting search platforms. They thus have a unique perspective, and have collaborated with the team at OpenSource Connections to create an extensive suite of tools to help users experiment with, measure and tune search. These tools are all open source, and are also available on the AWS hosted service from version 3.1. They include:

- User Behaviour Insights (UBI) – an open standard for recording user interactions with search results (what is clicked on, what is hovered over, what happens next), so we can see the user’s whole search journey. As UBI is an open standard it can work with any search engine, but with OpenSearch you can also import this analytics data back into an OpenSearch index, so it’s close to where you need it. This is a big improvement on the more common situation, where search analytics data comes from different systems owned by a different team and can be hard to get, slow and out of date. I’ve spoken about UBI at a few recent search conferences and, as a champion of the project, I find it hugely encouraging to see it supported in OpenSearch.

- Search Relevance Workbench (SRW) – a set of OpenSearch dashboards showing you useful metrics such as zero-result searches and the difference between the result lists from two different search configurations. Importantly, SRW can use data provided by UBI and can also run search relevance experiments: provide a set of queries and relevance judgements and you can see whether a new search configuration does better than the old one, with metrics including nDCG. Although it doesn’t let you create manual relevance judgements, it’s compatible with Quepid, so it can import these on demand.
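To make the “does the new configuration do better than the old one?” comparison concrete, here is a minimal sketch of nDCG@k averaged over a set of queries. It is not SRW’s implementation: the function names, the data layout (judgements as `{query: {doc_id: grade}}`, results as `{query: [doc_id, ...]}`) and the choice of exponential gain (2^grade - 1) are assumptions for illustration, and some tools use linear gain instead.

```python
import math

def dcg(grades):
    """Discounted cumulative gain with exponential gain (2^g - 1) and log2 rank discount."""
    return sum((2 ** g - 1) / math.log2(rank + 1)
               for rank, g in enumerate(grades, start=1))

def ndcg_at_k(ranked_docs, judged, k=10):
    """nDCG@k for one query; unjudged documents count as grade 0."""
    gains = [judged.get(doc, 0) for doc in ranked_docs[:k]]
    ideal = sorted(judged.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

def mean_ndcg(results_by_query, judgements_by_query, k=10):
    """Average nDCG@k over every judged query (a query with no results scores 0)."""
    scores = [ndcg_at_k(results_by_query.get(q, []), judged, k)
              for q, judged in judgements_by_query.items()]
    return sum(scores) / len(scores) if scores else 0.0

# Hypothetical judgements and result lists from an old and a new configuration
judgements = {"loaf": {"d1": 3, "d2": 1, "d3": 0}}
old = {"loaf": ["d3", "d2", "d1"]}
new = {"loaf": ["d1", "d2", "d3"]}
print(f"old: {mean_ndcg(old, judgements):.3f}, new: {mean_ndcg(new, judgements):.3f}")
# prints "old: 0.541, new: 1.000"
```

The new configuration puts the most relevant document first and so scores a perfect 1.0; a real experiment would of course use many queries, since a single query says little about overall quality.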

Elasticsearch joins the search tuning party

This week also saw an announcement from an Elastic engineer that he had created Relevance Studio, which looks similar to Search Relevance Workbench. It’s apparently not an ‘official’ project or Elastic product, and unfortunately it uses Elastic’s own not-exactly-open-source license, but it has some interesting features, including an MCP server and the ability to automate the tuning lifecycle. Unlike OpenSearch’s SRW, it also lets you make human relevance judgements.

I hope that Elastic see the value of this project and support it further. Compatibility with UBI and with Quepid’s export format would be nice to have (making human judgements is tedious, so re-using them wherever possible is essential).

Ready to self-tune? Not quite

As someone who regularly helps companies develop processes and methods for effective search tuning, I find these developments very exciting. Finally we have tuning tools as fully integrated parts of the search engine platform, at least for OpenSearch. Grab that click data and use it to figure out which configuration is best, or even feed it into an ML system that learns the best combination of settings automatically.

We’re not quite at the point where we can point our search engine at some content, some example queries, a user analytics framework etc. and let it figure out how to configure itself, but we’re taking some significant steps towards that particular nirvana. In the meantime we can be much more effective, spend less time hacking together internal tooling and focus more on what’s important: giving our users the very best results for their queries to drive our businesses forward.

If you’d like to find out how any of these tools could help you build better search, let me know.